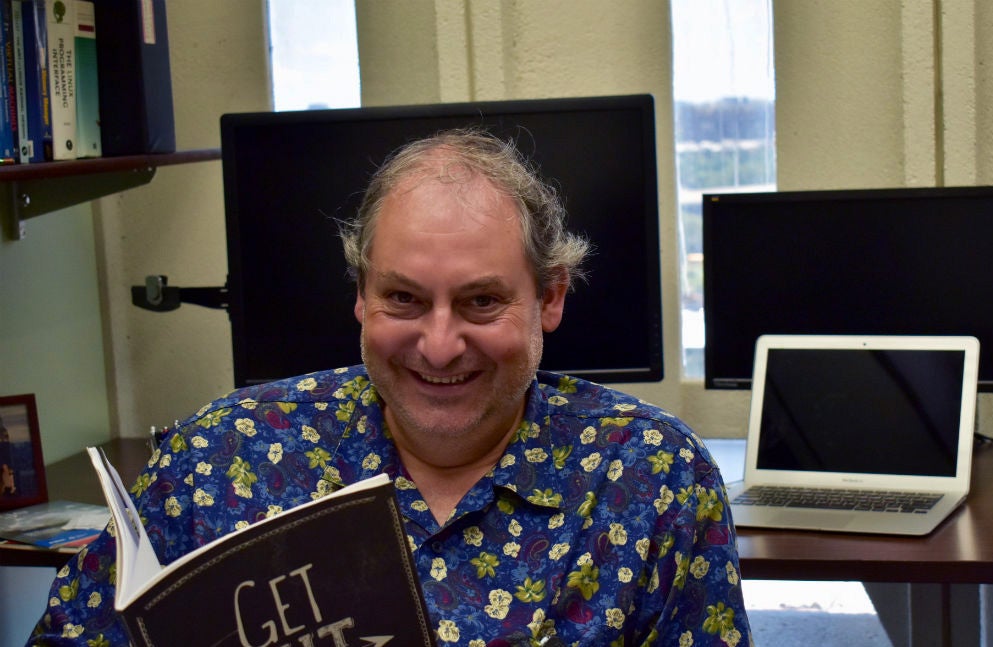

Associate Professor Mark Grechanik wins grant, best paper award for work on automating software integration tests

Computer Science Associate Professor Mark Grechanik is hoping to help companies increase the number of software integration tests they run while cutting the cost of this labor-intensive process. His approach examines frequently interacting components in software applications and automatically creates integration tests for the parts that work together.

Grechanik is the recipient of a grant worth over $375,000 from the National Science Foundation (NSF) for the work, “SHF: Small: Automatically Synthesizing System and Integration Tests.” He is the sole principal investigator on the grant, which began August 15 and runs for three years.

Grechanik presented the preliminary study that led to the NSF award at the 31st International Conference on Software Engineering & Knowledge Engineering in Lisbon, Portugal, in July, where he and his former Ph.D. student Gurudev Devanla won first place in the Best Paper Award category.

When a company spends years building software, it needs to ensure the software is of good quality, so it runs tests continuously. Programmers at those companies fill different roles: some write code, and others test it. There are different levels of tests as well: small unit tests, which might look at the functionality of a single program; mid-level tests, called integration tests, which examine how different programs work together; and large acceptance tests, which may last several days and can get quite expensive.

Catching software bugs during integration is critical, and running more tests at this phase is desirable, yet this is the stage at which the fewest tests are run. If a defect is missed during integration testing and found later, fixing it can cost five to 20 times what it would have cost to fix earlier.

Grechanik had a long career in industry and was a research manager at Accenture before joining UIC as a professor in 2012. There he saw firsthand the need for companies to increase the number of integration tests they perform.

“When I sat down with friends and former colleagues to discuss the problem, they drew a diagram of tests for an enterprise claim system with 10 million lines of code. They’re running 120,000 acceptance tests, and 500,000 unit tests, but the integration tests in the middle? Maybe 10,000. They would prefer more integration tests to be run than either the large or small tests, but they just don’t have the time and resources to create them,” Grechanik said.

Currently, programmers have to sit down together, discuss the code they’ve written, and think through scenarios where issues might come up. This is time-consuming, and dedicating large chunks of programmers’ time to the process is very expensive.

“If you have 100 functions and try to examine all the ways to combine them, you can have millions of tests. It’s not scalable, it’s not feasible to try to come up with all the interactions manually, and many of those tests aren’t needed,” said Grechanik.
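To give a rough sense of that scale, the short Python sketch below counts how many distinct groups of two, three, or four functions can be drawn from 100. The function name `count_combinations` and the choice to stop at groups of four are assumptions made for illustration; the article does not say how the combinations were counted.

```python
from math import comb


def count_combinations(num_functions: int, max_group_size: int) -> dict[int, int]:
    """Count unordered groups of each size from 2 up to max_group_size."""
    return {
        size: comb(num_functions, size)
        for size in range(2, max_group_size + 1)
    }


# Illustrative assumption: 100 functions, groups of up to four.
counts = count_combinations(100, 4)
for size, count in counts.items():
    print(f"groups of {size}: {count:,}")
# groups of 2: 4,950
# groups of 3: 161,700
# groups of 4: 3,921,225
```

Even when order is ignored and groups are capped at four functions, the total already exceeds four million candidate combinations.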

Grechanik and Devanla created ASSIST (Automatically SyntheSizing Integration Software Tests), which automatically finds frequently interacting components and generates integration tests for them. They experimented with three Java applications, and preliminary results indicate that ASSIST performed comparably with manually written, programmer-created tests. The work has been publicly released, and Grechanik plans to make it open source.
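The article does not describe how ASSIST identifies frequently interacting components, so the following Python sketch should not be read as its algorithm. It only illustrates the general idea under stated assumptions: that recorded call traces are available, that two functions called one after the other count as an interaction, and that a simple frequency threshold picks the pairs worth testing. The function `frequent_interactions` and the trace contents are hypothetical.

```python
from collections import Counter


def frequent_interactions(traces, min_count):
    """Return (pair, count) for adjacent call pairs seen at least min_count times."""
    pair_counts = Counter()
    for trace in traces:
        # Assumption: consecutive calls in a trace are treated as an interaction.
        for first, second in zip(trace, trace[1:]):
            if first != second:
                pair_counts[(first, second)] += 1
    # most_common() sorts pairs by descending count.
    return [(pair, n) for pair, n in pair_counts.most_common() if n >= min_count]


# Hypothetical traces from a claims application (names invented for illustration).
traces = [
    ["parse_claim", "validate_claim", "save_claim"],
    ["parse_claim", "validate_claim", "reject_claim"],
    ["parse_claim", "validate_claim", "save_claim"],
]

candidates = frequent_interactions(traces, min_count=2)
for (first, second), n in candidates:
    print(f"{first} -> {second}: seen {n} times")
```

In this toy example only the pairs seen at least twice become candidates for integration tests, which mirrors the point that many possible combinations never need a test at all.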

Grechanik is currently looking for two Ph.D. students to assist him with this work. He also works in security, simulation of complex cloud revenue management systems, and modeling different processes and optimization problems. Visit his website to learn more.